OpenAI’s o1 series of large language models (LLMs) takes a new approach to reasoning, promising stronger performance on complex problems than previous models. This paper reviews the available information on o1-preview and o1-mini, covering their strengths, weaknesses, and potential impact. It draws on user experiences, technical specifications, and comparisons with existing models such as gpt-4o, and concludes with an assessment of current limitations and areas for further research and development.

Introduction

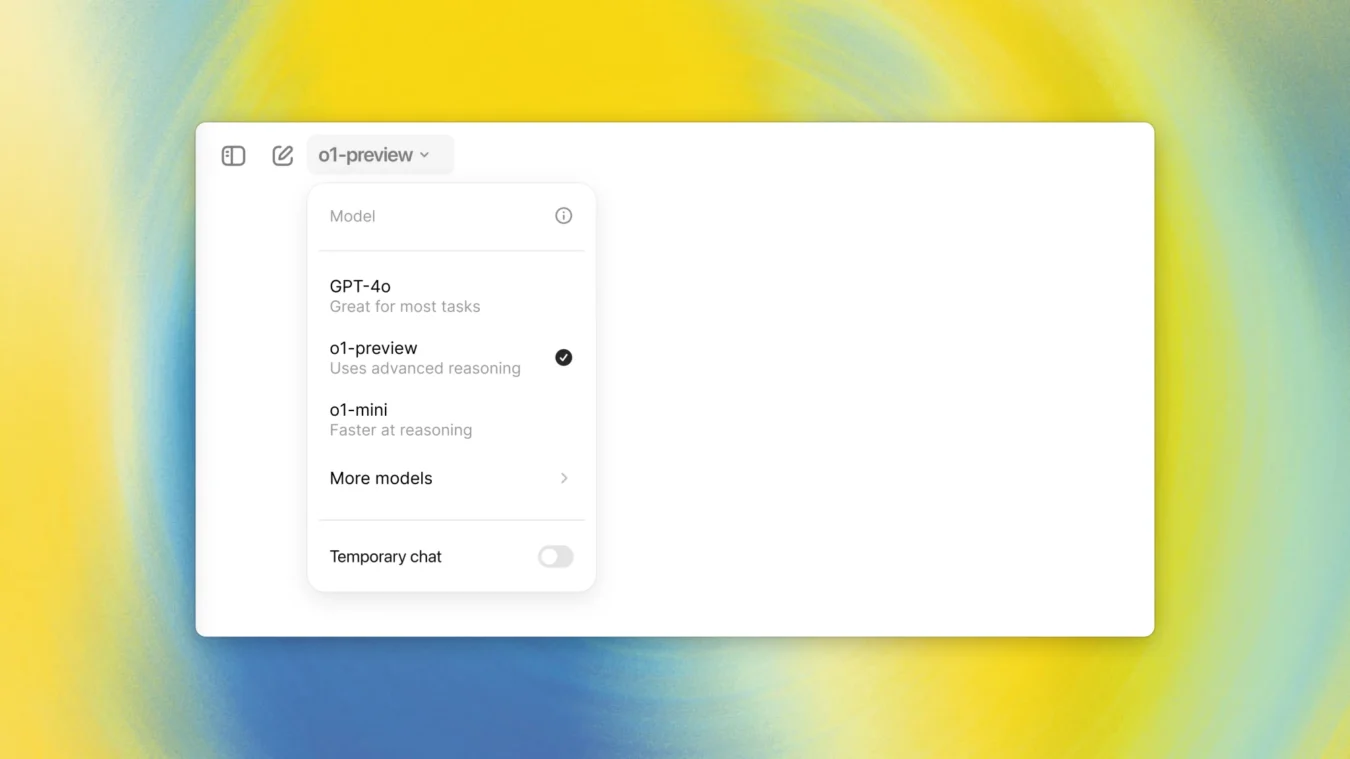

LLMs have advanced steadily in text generation, translation, and code generation. OpenAI’s o1 series, comprising o1-preview and o1-mini, shifts the emphasis to reasoning. These models generate “reasoning tokens” before answering: an internal chain of thought in which the model breaks a complex problem into steps and weighs alternative solutions before producing a response.

This paper examines the available data on o1-preview and o1-mini and analyzes their capabilities and limitations. It draws on user experiences, technical specifications, and comparisons with existing models such as gpt-4o to assess the potential impact of o1, and concludes with an outlook on the future of reasoning AI and the areas that require further exploration and development.

Reasoning Capabilities: A New Paradigm for LLMs

The o1 series stands out for its reasoning capabilities. Earlier models such as gpt-4o typically begin answering immediately, producing the response token by token. The o1 models, which OpenAI describes as trained with reinforcement learning to reason before responding, first generate “reasoning tokens” that simulate a more deliberate thinking process.

These reasoning tokens form an internal chain of thought, allowing the model to dissect complex tasks, compare approaches, and work through intermediate steps before committing to an answer. The exact mechanisms remain undisclosed, and the raw reasoning tokens are not exposed to users, but early evidence suggests a significant improvement on intricate tasks that demand logical deduction and multi-step problem-solving.
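Although the reasoning itself is hidden, the number of reasoning tokens a response consumed can still be measured. The following is a minimal sketch, assuming the usage payload exposes a `completion_tokens_details.reasoning_tokens` field as OpenAI's Chat Completions responses do. The helper name `split_completion_tokens` and the sample numbers are hypothetical and are not measured results.

```python
def split_completion_tokens(usage: dict) -> dict:
    """Separate hidden reasoning tokens from visible answer tokens."""
    completion = usage.get("completion_tokens", 0)
    # The details object may be missing for models without reasoning tokens.
    details = usage.get("completion_tokens_details") or {}
    reasoning = details.get("reasoning_tokens", 0)
    return {
        "reasoning": reasoning,
        "visible": completion - reasoning,
        "reasoning_share": reasoning / completion if completion else 0.0,
    }


# Hypothetical usage payload for illustration only.
sample_usage = {
    "prompt_tokens": 120,
    "completion_tokens": 1000,
    "completion_tokens_details": {"reasoning_tokens": 768},
}

breakdown = split_completion_tokens(sample_usage)
print(breakdown)
```

In this made-up payload, most of the completion tokens are spent on reasoning that the user never sees, which is why the visible length of an answer is a poor guide to how much work the model did.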

User reports highlight o1’s proficiency in addressing challenges that previously stumped widely available models. Users have reported success in utilizing o1 for tasks like:

- Complex Coding Challenges: Refactoring code, generating code from specifications, and even debugging complex software issues.

- Advanced Mathematical Problem Solving: Solving intricate equations, working through multi-step proofs, and providing insightful explanations for mathematical concepts.

- Scientific Reasoning: Understanding and responding to queries requiring knowledge of scientific principles, analyzing data sets, and even generating hypotheses based on given information.

These early indicators suggest that o1’s reasoning capabilities could significantly impact various fields, potentially automating complex tasks and augmenting human capabilities in research, development, and problem-solving.

OpenAI o1-preview: A Broadly Knowledgeable Reasoning Engine

OpenAI positions o1-preview as the flagship model in the o1 series, designed to tackle intricate problems demanding extensive general knowledge. This model reportedly draws on a vast dataset encompassing a wide range of domains, enabling it to engage with complex queries requiring an understanding of diverse concepts, relationships, and contexts.

At launch, access to o1-preview was restricted to ChatGPT Plus subscribers and developers in the highest API usage tier, but early feedback indicates its potential for addressing challenges that require broad knowledge and in-depth reasoning. Users have reported o1-preview’s ability to:

- Generate Comprehensive Content: Creating detailed reports, summarizing complex topics, and crafting compelling narratives based on a nuanced understanding of the subject matter.

- Engage in Meaningful Dialogue: Participating in conversations that require contextual awareness, remembering previous interactions, and responding in a way that demonstrates a deeper understanding of the ongoing discussion.

- Assist with Research and Analysis: Synthesizing information from multiple sources, identifying patterns and trends, and even formulating conclusions based on a comprehensive analysis of the provided data.

Despite its promising capabilities, o1-preview costs more than other models. Its API price of $15 per million input tokens ($60 per million output tokens at launch) reflects the computational resources required for its sophisticated reasoning. Because reasoning tokens are billed as output tokens, a response can cost more than its visible length suggests.

OpenAI o1-mini: A Cost-Effective Solution for Specialized Reasoning

In contrast to o1-preview’s broad focus, o1-mini is tailored to coding, math, and science tasks. This specialization allows a smaller model with a reduced computational footprint and a significantly lower price of $3 per million input tokens ($12 per million output tokens at launch), making it a more accessible option for developers and researchers working with limited budgets.
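To make the price difference concrete, the following sketch estimates the cost of a single request on each model. It rests on several assumptions: the $15 and $3 figures quoted above are treated as input-token rates, output tokens are priced at $60 and $12 per million (launch list prices that may since have changed), and hidden reasoning tokens are billed as output tokens. The token counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens


# Input rates come from the text; output rates are assumed launch list prices.
PRICES = {
    "o1-preview": Pricing(input_per_million=15.0, output_per_million=60.0),
    "o1-mini": Pricing(input_per_million=3.0, output_per_million=12.0),
}


def estimate_cost(model: str, input_tokens: int,
                  visible_output_tokens: int, reasoning_tokens: int = 0) -> float:
    """Estimate the USD cost of one request, counting reasoning tokens as output."""
    price = PRICES[model]
    billed_output = visible_output_tokens + reasoning_tokens
    total = (input_tokens * price.input_per_million
             + billed_output * price.output_per_million)
    return total / 1_000_000


# Hypothetical request: 2,000 input, 500 visible output, 3,000 reasoning tokens.
preview_cost = estimate_cost("o1-preview", 2_000, 500, 3_000)
mini_cost = estimate_cost("o1-mini", 2_000, 500, 3_000)
print(f"o1-preview: ${preview_cost:.3f}, o1-mini: ${mini_cost:.3f}")
```

Under these assumptions the same request costs five times as much on o1-preview, and the hidden reasoning tokens dominate the bill for both models.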

Despite its smaller size and specialized focus, o1-mini demonstrates impressive capabilities within its designated domains. Users have reported o1-mini’s effectiveness in:

- Generating Concise and Efficient Code: Writing code snippets, completing functions, and suggesting optimizations for existing code with a focus on speed and efficiency.

- Solving Well-Defined Mathematical Problems: Tackling equations, performing calculations, and working through logical proofs with a high degree of accuracy.

- Analyzing and Interpreting Scientific Data: Processing datasets, identifying patterns, and generating visualizations to support scientific research and analysis.

Early benchmarks suggest that o1-mini’s performance in coding tasks rivals that of o1-preview, highlighting its potential as a cost-effective alternative for developers primarily focused on software development and related applications.

Limitations and Future Directions

While the o1 series shows significant promise, it is essential to acknowledge its limitations and areas where further investigation is necessary.

- Limited Availability: Access to o1, especially o1-preview, is currently restricted, limiting the scope of experimentation and analysis.

- Beta Phase Constraints: As a beta product, o1 launched with a rate limit of 20 requests per minute, no support for system messages, and a fixed temperature that callers cannot adjust.

- Lack of Transparency in Reasoning Process: While the concept of “reasoning tokens” provides a high-level understanding, the internal workings of o1’s reasoning process remain largely opaque.

- Limited Evaluation Metrics: Comprehensive benchmarks and standardized tests for evaluating reasoning capabilities in LLMs are still under development, making it challenging to objectively compare o1’s performance with other models.
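The beta constraints listed above affect how existing chat-style code must be adapted. The sketch below shows one possible workaround, not an official recipe: it folds any system message into the first user message, drops the `temperature` parameter, and tracks a limit of 20 requests per 60 seconds. The names `adapt_request_for_o1` and `RequestWindow` are hypothetical, and the request is a plain dictionary rather than a call to any real client library.

```python
from collections import deque


def adapt_request_for_o1(request: dict) -> dict:
    """Return a copy of a chat-style request without system messages or temperature."""
    system_parts = [m["content"] for m in request["messages"] if m["role"] == "system"]
    messages = [dict(m) for m in request["messages"] if m["role"] != "system"]
    if system_parts:
        preamble = "\n\n".join(system_parts)
        for message in messages:
            if message["role"] == "user":
                # Prepend the former system instructions to the first user turn.
                message["content"] = f"{preamble}\n\n{message['content']}"
                break
        else:
            messages.insert(0, {"role": "user", "content": preamble})
    adapted = {k: v for k, v in request.items() if k != "temperature"}
    adapted["messages"] = messages
    return adapted


class RequestWindow:
    """Sliding-window limiter: at most `limit` requests per `period` seconds."""

    def __init__(self, limit: int = 20, period: float = 60.0):
        self.limit = limit
        self.period = period
        self._sent = deque()

    def wait_time(self, now: float) -> float:
        """Seconds to wait before another request may be sent at time `now`."""
        while self._sent and now - self._sent[0] >= self.period:
            self._sent.popleft()
        if len(self._sent) < self.limit:
            return 0.0
        return self.period - (now - self._sent[0])

    def record(self, now: float) -> None:
        """Note that a request was sent at time `now`."""
        self._sent.append(now)


request = {
    "model": "o1-preview",
    "temperature": 0.2,
    "messages": [
        {"role": "system", "content": "Answer concisely."},
        {"role": "user", "content": "Prove that 17 is prime."},
    ],
}
adapted = adapt_request_for_o1(request)
print(adapted)
```

In real use, `now` would come from a clock such as `time.monotonic()`, and the caller would sleep for `wait_time(now)` seconds before sending and then call `record`. Passing the time in explicitly keeps the limiter easy to test.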

Addressing these limitations will be crucial for the wider adoption and effective utilization of o1. OpenAI’s ongoing research and the expansion of access to a broader user base are expected to yield valuable insights and drive further development in this domain.

Recent Developments (as of October 2024)

- Increased Availability: OpenAI has gradually expanded access to o1-preview and o1-mini, including to developers on lower API usage tiers.

- Improved Benchmarks: OpenAI has released more comprehensive benchmarks demonstrating the improved reasoning capabilities of o1 compared to previous models.

- Developer Feedback: Ongoing feedback from the developer community has led to improvements in the models’ performance and user experience.

- OpenAI’s Continued Research: OpenAI continues to invest in research to refine o1 models and explore new techniques for enhancing reasoning capabilities in LLMs.

Conclusion: A Glimpse into the Future of Reasoning AI

OpenAI’s o1 series represents a significant step towards LLMs with advanced reasoning capabilities. Although still in its early stages, o1 shows the potential to change how complex tasks are approached across diverse fields.

As research progresses and access to these models expands, we can expect a deeper understanding of their capabilities, limitations, and potential applications. Robust evaluation metrics and new techniques for making the reasoning process more transparent will be crucial for realizing the full potential of these models.

Further Reading

- OpenAI. (2024, September 12). New reasoning models: OpenAI o1-preview and o1-mini. [Online forum post]. OpenAI Developer Forum. Retrieved from https://community.openai.com/t/new-reasoning-models-openai-o1-preview-and-o1-mini/938081

- OpenAI. (2024, September 13). OpenAI o1-preview and o1-mini: New Reasoning Models. [Blog post]. OpenAI Blog. Retrieved from https://openai.com/blog/openai-o1-preview-and-o1-mini

- GitHub Blog. (2024, September 12). Try out OpenAI o1 in GitHub Copilot and Models. [Blog post]. GitHub Blog. Retrieved from https://github.blog/news-insights/product-news/try-out-openai-o1-in-github-copilot-and-models/

- Reddit. (2024, September 12). OpenAI o1-preview and o1-mini appear on the LMSYS leaderboard. [Online discussion thread]. Reddit. Retrieved from https://www.reddit.com/r/OpenAI/comments/1fjxf6y/openai_o1preview_and_o1mini_appear_on_the_lmsys/